Necessity may be the mother of invention, but invention’s children are somewhat less predictable.

By Luke Sacher

One of the best movies I’ve seen in years is David O. Russell’s Joy, a biopic starring Jennifer Lawrence as Joy Mangano, inventor of the Miracle Mop, holder of over 100 patents and one of America’s top contemporary industrial entrepreneurs. “I watch somebody struggle with something,” she explains, “and it instantly triggers something in me. I start to think, ‘There is a better way. It’s going to help so many people.’ I become laser-focused on getting to that. I do not stop until I figure out the solution.”

Library of Congress

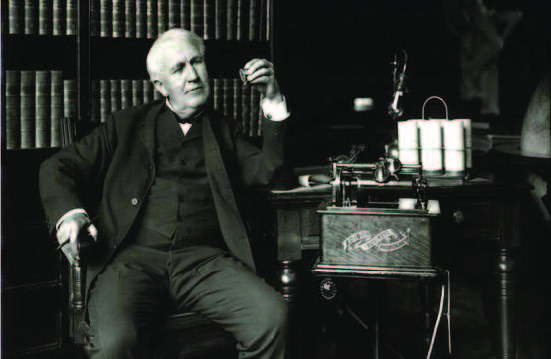

Thomas Edison said almost exactly the same thing: “I never pick up an item without thinking of how I might improve it. There’s a better way, I shall find it.” Obviously, comparing the hands-free mop to Edison’s phonograph, incandescent light bulb, stock ticker or motion picture camera is a false equivalency. But let’s give the lady credit where it’s due. She and the Wizard of Menlo Park are kindred spirits, sharing personality traits common to all inventors, including imagination, initiative, persistence and a vision of a future where the human experience is more efficient and enjoyable.

Edison (above) also famously noted that genius is “one percent inspiration and 99 percent perspiration.” And he was correct. Invention can be a sweaty, dirty and sometimes dangerous business, especially when short-term results are the driving motivator. If you think about it, this is the basic plot of every Roadrunner cartoon. Plus, invention is full of economic uncertainty, for it involves pushing knowledge forward into places where little or no knowledge exists. “We do not know what it is that we do not know,” economist Israel Kirzner once observed. Additionally, we do not know the true cost of finding out what it is that we do not know—or whether it is even worthwhile knowing. “That is where entrepreneurship comes in,” Kirzner concluded.

In other words, not only do inventors have to get the idea and execution right to gain traction, there must also be a sound business plan behind it. This is why the scientists, technologists and innovators who do succeed deserve our undying admiration.

That is, until their inventions start killing us.

Alas, history is littered with big ideas that turned out to be perfectly horrible in the long run. Their costs can be measured in human lives. Fortunately, there are many more small flashes of inspiration that blossomed into true game-changers. When confronted with the products and technologies that promise to change our lives for the better today, I find it helpful to take a look back at the good and the bad.

CAN DO

National Gallery of Art

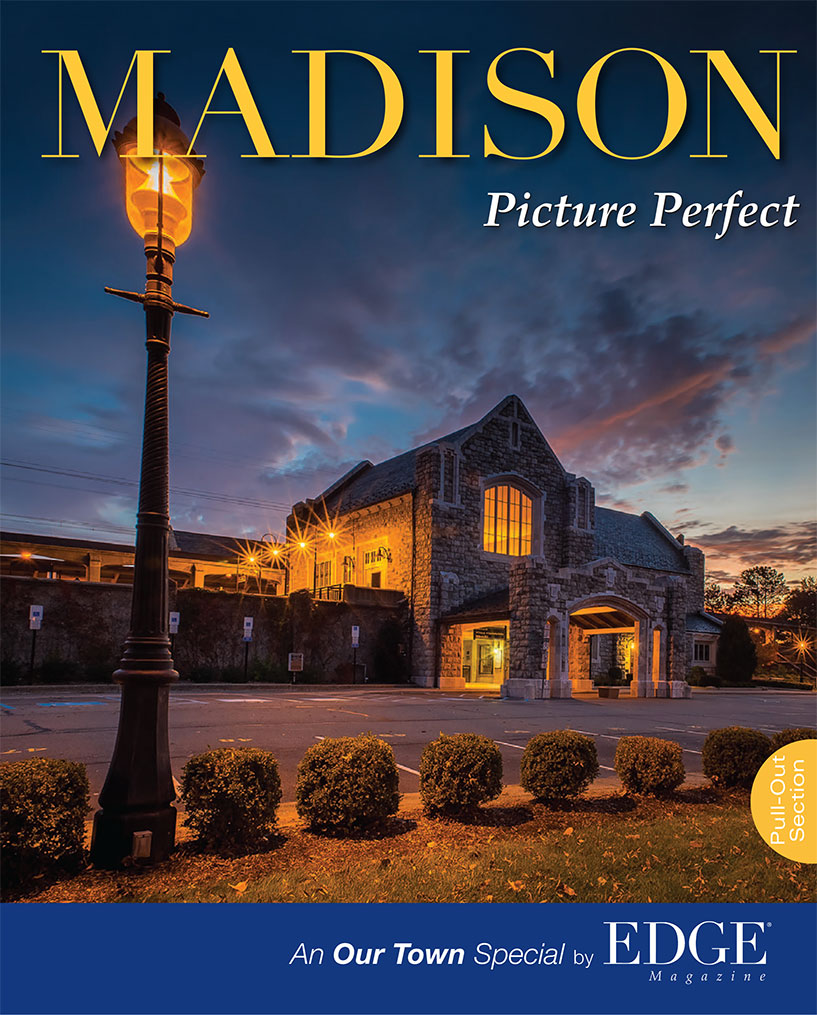

War, claimed the Greek philosopher Heraclitus, is the “father of all things.” We have war to thank for the tin can. In 1797, Napoleon Bonaparte (right) conscripted virtually every able man in France into the first modern mass army and launched it across Europe. A mob of more than one million undisciplined, illiterate sans culottes, deployed from the English Channel to Switzerland, rolled over France’s opponents by sheer force of numbers. But an army marches on its stomach, and the Grande Armée spent more time foraging for food than fighting. On the eve of the first major battle against the Austrians, while consuming a “pot luck” dinner made from whatever his chef could scrounge up in the surrounding village, Napoleon decreed a massive prize to anyone who could devise a way to preserve and package rations for his troops. In 1810, after 15 years of experiment, Nicolas Appert claimed that prize, on the condition that he make his invention public. That year, he published The Art of Preserving Animal and Vegetable Substances. Appert lived in Epernay, the home of champagne. He modified the heavy bottles as storage containers by shortening the necks to widen the opening. He then filled them with various foods, placed them in boiling water for 30 minutes, and sealed them with corks and wire cages. Ironically, he had also accidentally invented pasteurization, decades before Louis Pasteur made the scientific connection between heat and killing bacteria!

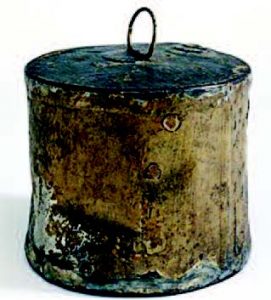

Science Museum of London

Meanwhile, across the Channel, a pair of Englishmen, Bryan Donkin (right) and John Hall, acquired the patent for a lighter, unbreakable container, the tin-plated steel can. In 1813, they built the world’s first commercial canning factory in London, and produced their first tin-canned goods exclusively for the British Army and Royal Navy. Soon all the other European empires adopted the tin can to provision the ranks of their own armies and navies. But there was one fly in the ointment, so to speak. Early tin cans were crudely sealed with a 50/50 tin and lead alloy solder. Troops in the field and sailors on the high seas would customarily reheat their contents while still in the cans, exposing the lead alloy to acid and dissolving the lead into the food. It’s disconcerting to think that all of the soldiers and sailors in the armies and navies of Europe might have been half-crazy from lead poisoning during the mid-1800s. It might be part of the reason why the Crimean War (1853–1856) is well remembered for its “great confusion of purpose” and “notoriously incompetent international butchery.”

Upper Case Editorial

Fortunately for the civilian population, canned foods were not mass-produced until the final decades of the 19th century. That happened after William Lyman invented the can opener in 1870, and the crude technique of soldering cans shut with globs of lead had been discontinued. Today, 40 billion cans of food are consumed in just Europe and the United States, according to the Can Manufacturers Institute. The tin can revolutionized how, what and when people could eat—not at all a small contribution to the growth and health of the global population.

THE COLOR PURPLE

Mauveine. Never heard of it? Think “mauve” and settle in for a roller-coaster ride through a century of innovation. It begins around 1800 with James Watt, whose improvements to the steam engine in the 1780s triggered the Industrial Revolution. William Murdoch, a research assistant at Watt’s steam engine works in Birmingham, England, realized that coal could be used for something other than engine boiler fuel: Heat coal up in a kiln and it emits flammable gases that could be collected, stored, and used as fuel for illumination. Eureka…gas light was invented. Within a few years, complete gaslight piping networks were built in London and other major cities in Great Britain.

Engraving by Edward Burton

What remains of the coal after the gases are cooked out of it? Coal tar. Sticky muck, reeking of ammonia. What to do with it? It was the Industrial Revolution, remember, so the short answer was throw it into the rivers. Enter a man from Glasgow named Charles Macintosh (left), a clothing and dye maker who needed the ammonia as a solvent and bleach. After a bit of fiddling, he managed to extract another solvent from the tar, naphtha, which turned out to be the perfect solvent thinner for a newly imported material from the Orient: rubber. He sandwiched the thinned rubber between two layers of fabric and in 1824 invented a game-changing raincoat, the Mackintosh!

People interested in chemistry began asking themselves what else might be extracted from coal tar. The British, who were facing a malaria crisis in their Far Eastern colonies, hoped that the only remedy for the mosquito-borne disease, quinine, might somehow be synthesized from coal tar. In 1856, a young British chemist named William Perkin (above right) tried for months without any luck to coax quinine from coal tar. One day, he tossed another of his failed solutions into the sink, where it mixed with water and turned bright Royal Mauve. By complete accident, he had stumbled upon the world’s first artificial aniline dye, which he named Mauveine. This “invention” made him a fortune thanks to the emerging middle class of the industrial age, who were keen to emulate Queen Victoria’s sartorial splendor. Ironically, Perkin’s luck appeared to run out when he attempted to persuade British academia and the investor class of the potential for progress and profits to be realized by creating a full-scale chemical industry. They were happy to keep building railroads and textile mills. So he approached academics and investors in Germany, where intellectual merit counted for more than family ancestry. By 1870, German chemical corporations like Agfa, BASF, Hoechst, and Bayer had left the rest of Europe in their wake, inventing everything from dyes to aspirin to artificial fertilizer to high explosives. All were derived, in one way or another, from coal tar.

A History of Chemistry

Upper Case Editorial

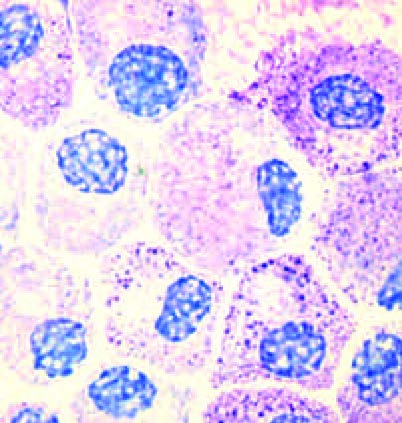

In 1882, German pathologist Paul Ehrlich accidentally spilled some mauveine dye into a petri dish and noticed that it selectively stained just one kind of bacteria. Ehrlich continued his research and, 17 years later, invented Salvarsan, the first chemical treatment for syphilis. Ehrlich became the father of a new science, which he named Chemotherapy. In 1908, he won the Nobel Prize for Medicine.

THAT CERTAIN GLOW

Upper Case Editorial

In 1898, Marie (left) and Pierre Curie discovered radium. In 1903, they were both awarded the Nobel Prize in Physics. Marie was the first woman ever to win the prize. Eight years later, she received a second Nobel, for isolating radium, discovering a second atomic element, polonium (named after her home nation of Poland), and her groundbreaking research into “radioactivity” (a word she invented). By then, radium was being industrially refined and Marie Curie was convinced that it had potentially “magic” healing properties. Indeed, for many years, she wore a pendant around her neck containing pure radium.

Upper Case Editorial

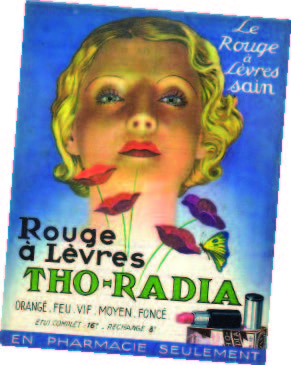

Madame Curie was not alone. Burk & Braun’s Radium Schokolade chocolate bar and Hippman-Blach bakery’s Radium Bread, both made with “radium enriched” water, were very popular and profitable. They were both discontinued in 1936. The Revigator stored a gallon of water inside a bucket with an enamel lining impregnated with radium. Its manufacturers claimed that drinking Revigator water could cure arthritis and reduce skin wrinkles. Toothpaste containing both radium and thorium (radium’s “grandfather” element) was sold by Dr. Alfred Curie. He was no relation to Marie or Pierre, but seized upon the opportunity to capitalize on their common name. Alfred Curie also manufactured Tho-Radia cosmetics, a line that encompassed powders, creams and lipsticks that promised to rejuvenate and brighten ladies’ faces.

Early 20th century physicians produced radium suppositories, heating pads, and coins used to “fortify” single glasses of water. They were meant to treat rheumatism, weakness, malaise, and almost any health complaint for which their hypochondriac patients sought miracle cures. Before Viagra and Cialis, impotence was treated with radioactive “bougies” (the French word for candles)—wax rods inserted into the urethra—and athletic supporters containing a layer of radium-impregnated fabric. A popular treatment called the Radioendocrinator involved a small “portefeuille” holding a number of paper cards coated in Radium, worn inside underwear at night. Its inventor died of bladder cancer in 1949.

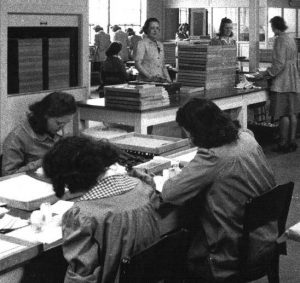

Soapbox Productions

Perhaps the most famous misuse of radium occurred between 1917 and 1936, when the U.S. Radium Corporation employed more than a hundred workers—mostly young women (below) just out of high school—to paint watch and clock faces with their patented “Undark” paint. As many as 70 women were hired to mix the paint, which was formulated of glue, water, and radium sulfide powder. Workers were taught to shape paint brushes with their mouths to maintain a fine point, and after work hours, painted their nails and teeth with the glow-in-the-dark paint for personal amusement. The company encouraged the young women to ingest the dangerous mixture, while its management and research scientists (who were aware of the danger) carefully avoided any exposure themselves. Five “Radium Girls” sued the U.S. Radium Corporation and won a large settlement in a landmark case involving labor safety standards and workers’ rights. There are no verifiable records of exactly how many suffered from anemia, inexplicable bone fractures, bleeding gums, and necrosis. Though many of the factory’s workers became ill, cases of death by radiation sickness were initially attributed to diphtheria, and even syphilis, in an attempt to smear their reputations.

CHEMICAL ATTRACTION

National Academy of Sciences

Every so often, a brilliant solution to one problem will create an even larger one. An inventor hailed as a hero during his or her time ends up being recast as a villain by history. Meet Thomas Midgley, Jr. (right), inventor of leaded gasoline and Freon. If you didn’t know he was a real person, you’d think he was a character from Kurt Vonnegut’s Cat’s Cradle. Over the course of his career, Midgley was granted over 100 patents, including those for tetraethyl leaded gasoline (commonly called TEL and trademarked as Ethyl), and the first chlorofluorocarbons (commonly called CFCs and trademarked as Freon).

Midgley began working at General Motors in 1916. In 1921, he discovered that the addition of tetraethyl lead to gasoline prevented engine “knocking.” GM named the substance Ethyl, and made no mention of lead in its reports and advertising. Oil companies and auto makers, especially GM, which owned the patent jointly with Midgley, promoted the additive as a superior alternative to ethanol, on which there was very little profit to be made. In 1923, the American Chemical Society awarded Midgley the Nichols Medal for the Use of Anti-Knock Compounds in Motor Fuels. He then took a prolonged vacation in Miami to cure himself of lead poisoning.

Upper Case Editorial

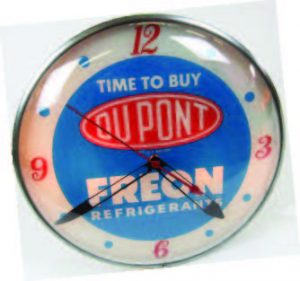

Later in the decade, Midgley was assigned to another GM division, Frigidaire. The modern, self-contained plug-in refrigerator had existed for almost two decades by then, but it used refrigerant chemicals that were not only toxic and corrosive, but occasionally explosive, including ammonia, chloromethane, propane, and sulfur dioxide. Midgley was tasked with finding a safer alternative. His research team incorporated fluorine into a hydrocarbon and synthesized dichlorodifluoromethane, the first chlorofluorocarbon (CFC). They named it Freon. Freon soon became the standard refrigerant, and was later used in aerosol spray cans and asthma inhalers (!) as a propellant. In 1937, The Society of Chemical Industry awarded Midgley the Perkin Medal (named after William Perkin, aka Mr. Mauveine) for his invention.

Upper Case Editorial

Though recognized as a brilliant chemist, Midgley can claim two of history’s most environmentally catastrophic inventions. The release of large quantities of lead into the atmosphere has been linked to long-term health problems, including neurological impairment in children and increased levels of violence and criminality in major population centers. The ozone-depleting and greenhouse gas effects of CFCs only became widely known 30 years after Midgley’s death. Author and scholar Bill Bryson summed Midgley up beautifully when he remarked that he possessed “an instinct for the regrettable that was almost uncanny.” Environmental historian J.R. McNeill said that Midgley “had more impact on the atmosphere than any other single organism in Earth’s history.”

BRIGHT, SHINY OBJECTS

Say what you will about the law of unintended consequences, but it is most definitely an equal-opportunity destroyer. A good invention gone bad doesn’t always harm people, though. Sometimes it only demolishes an industry. In 1981, the compact disc hit record stores, the very thing it would inadvertently kill. The 5” CD replaced LP records and audio cassettes. Its size rendered music packaging unimportant, ending an era of album-cover art that brought some of the world’s most gifted artists and photographers into our homes. The quantum leap in audio quality CDs delivered, which did not erode over time as vinyl and magnetic tape did, fulfilled the promise of the early digital age.

For the next 20-or-so years, the CD was one of the best things that ever happened to the record industry. Baby Boomers, grown older and wealthier (and less interested in new music), were all too happy to re-purchase their favorite albums, even at double the price of the old LPs. Industry revenues soared. Record company executives, keen on generating foolproof profits, were delighted to sell their existing catalogs in a new package. At the same time, they also poured big money into recording contracts with established superstars and the overnight sensations created by that other thing that began in 1981, MTV. A lot of that cash came out of the budgets traditionally earmarked for promising acts that needed more career nurturing. They were put on starvation diets and pressured to produce hit videos instead of developing loyal audiences. The result was a mix of aging, bloated artists and one-hit wonders.

Photo by Santeri Vinamaki

I was a part of the industry during this period, having contributed to over 100 music videos as an assistant cameraman and camera operator from 1983 to 1995. I worked with Van Halen, Joe Jackson, Guns N’ Roses, Anita Baker, The Beastie Boys, Kenny G, Jazzy Jeff and The Fresh Prince, James Taylor, Willie Nelson and dozens more. Most of the shoots were great fun, 20-hour days notwithstanding. The few that were pure misery were mostly caused by despicable talent managers, producers and “A&R men” who would squeeze every last bit of production value out of both crewmembers and stage or location expenses. I was almost seriously injured (and possibly killed) more than a few times. On the continuous spectrum of business ethics, you can find promoters and managers of pop music artists somewhere south of people who look after the best interests of professional boxers.

Industry executives had been warned by their engineers since Day One that digitizing music in the form of a CD would, one day, mean a loss of control of their content. Although they had been reaping profits from digital technology for nearly two decades, they were still analog thinkers. The chickens came home to roost in 1999, when personal computers like the iMac, with plug-and-play capability, arrived. The new generation of desktops and laptops was configured with built-in Internet and drives that not only could play CDs but also “rip” them as MP3 files. The industry had reduced its product to digital data, and now anyone with a computer could take it for free. Even a novice user could become an audio engineer after a few days of online tutorials, ushering in an era of anonymous, shame-free theft through file-sharing sites like Napster.

Music piracy, a minimal concern until then, cratered the industry. In the ensuing years, more than 370,000 jobs were eliminated. Music companies and artists lose more than $50 billion annually. A generation ago, bands toured to promote album sales, which climbed into the hundreds of thousands or even the millions. Today, they hit the road to survive, and for all but a fortunate few, music sales barely pay the catering bill.

SHIFT F7…BANG!

If you could make almost anything (and I mean anything), from a chess piece to a heart valve to a gun, all by yourself, in your garage…what would you make? Today’s most intriguing wait-and-see invention has to be the 3D printer. Known as additive manufacturing (AM), this technology creates three-dimensional objects by successive layering of material under computer control. 3D printers use an Additive Manufacturing file (AMF) created with a 3D scanner, or a camera with special “photogrammetry” software, or a computer-aided design (CAD) package. The machine itself uses a number of different production technologies, which frankly are so beyond my personal knowledge that I can’t even begin to describe them: Binder Jetting, Directed Energy Deposition, Material Extrusion, Material Jetting, Powder Bed Fusion, Sheet Lamination and Vat Photopolymerization.

3D printers support almost 200 different materials in four basic categories: plastic, powder, resins, and “other.” Other can include titanium, stainless steel, bronze, brass, silver, gold, ceramics, chocolate, glass, concrete, sandstone and gypsum. Printers capable of producing household chemicals, pharmaceutical medications, and even living tissue cultures for transplant organs are currently in an experimental stage. Their developers envisage both industrial and domestic use for this technology, which would also enable people in remote locations to produce their own medicine or replacement parts for other machines.

Just as nobody could have predicted the profound cultural impact of the printing press in 1450, the steam engine in 1750, or the transistor in 1950, it is impossible to predict the impact of 3D printing. However, 3D printing makes it possible to produce single items for the same unit cost as producing thousands, thus undermining a foundational law of mass production: economy of scale. It will almost certainly have as profound an effect on the world as the advent of the production line and the factory did more than a century ago. Futurist Jeremy Rifkin has claimed that 3D printing marks the beginning of a third industrial revolution.

What will that revolution look like? By the end of the decade, you will be able to use an affordable home 3D printer to create many of the things that now require a special trip to a store. Just download the file, push print. Sounds like fun, right? Well, consider the fact that in 2012 a Texas company posted design files for a fully functional plastic gun that could be produced by anyone with a 3D printer.

Modern Readers

The U.S. Department of State made the company take down the file after several months, but if you learned anything from the CD story in this article, you know the genie was long out of the bottle by then.

According to a memo released by Homeland Security and the Joint Regional Intelligence Center, “significant advances in 3D printing capabilities, availability of free digital 3D printable files for firearms components, and difficulty regulating file sharing may present public safety risks from unqualified gun seekers who obtain or manufacture 3D printed guns…[and] proposed legislation to ban 3D printing of weapons may deter, but cannot completely prevent their production. Even if the practice is prohibited by new legislation, online distribution of these 3D printable files will be as difficult to control as any other illegally traded music, movie or software files.”

The memo overlooks a key problem with 3D firearms. You could murder someone and then pop your gun into the microwave, melting any evidence of the crime. Who knows? Maybe the unintended consequence of 3D printing will be an entirely new branch of forensics. This could get interesting.